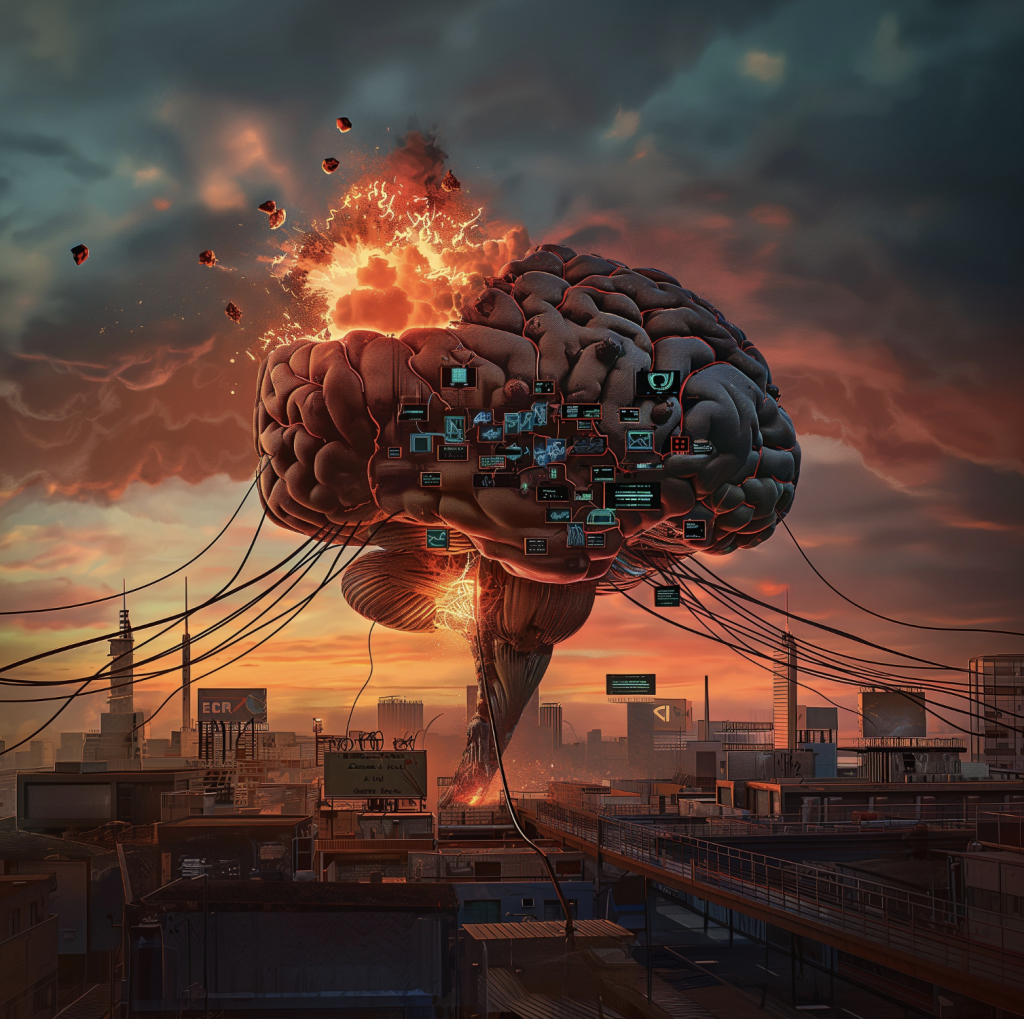

Image created with Midjourney.

"We knew the world would not be the same. A few people laughed, a few people cried, most people were silent."

I was reminded of this quote by J. Robert Oppenheimer, about the reactions to the first test of the atomic bomb, this week, when OpenAI stunned the world with the introduction of GPT-4o and, a few days later, the two top security people at the company resigned.

The departure from OpenAI of Ilya Sutskever and Jan Leike, two key figures in the field of AI security, raises the question of whether we will look back on this week a few decades from now and wonder how it was possible that, despite the clear signs of the potential dangers of advanced AI, the world was more impressed with GPT-4o's ability to sing a lullaby.

GPT-4o is primarily an attack on Google

The most striking thing about GPT-4o is the way it can understand and generate combinations of text, audio and images. It responds to audio as quickly as a human, its performance for text in non-English languages has been significantly improved, and it now costs half as much to use through the API.

The innovation lies mainly in the way people can interact with GPT-4o, without a major qualitative improvement in the results. The product is still half-finished, and although what the world watched on Monday was largely a demonstration that is not yet ready for large-scale use, the enormous potential was abundantly clear.

Shiny rims on a Leopard tank

It is not likely that the world will soon come to an end because GPT-4o can sing lullabies in a variety of languages. What should worry any clear-thinking person is that OpenAI made this introduction a day before Google I/O, to show the world that it is now mounting a full frontal assault on Google.

Google is under a lot of pressure, for the first time in the search giant's existence. It hardly has a financial or emotional relationship with the bulk of its users, who can switch to OpenAI's GPTs with a few clicks of the mouse as quickly as once happened to Altavista when Google proved to be many times better.

The danger posed by OpenAI's competition with Google is that all kinds of applications are rushed into the marketplace before their consequences are clear. With GPT-4o it is not too bad, but it looks more and more like OpenAI is also making progress in the field of AGI, or artificial general intelligence, a form of AI that performs as well as or better than humans at most tasks. AGI doesn't exist yet, but creating it is part of OpenAI's mission.

The breakthrough of social media in particular has shown that the impact on the mental state of young people and destabilization of Western society through widespread use of dangerous bots and click-farms was completely underestimated. Lullabies from GPT-4o may prove as irrelevant as decorative rims on a Leopard tank. For those who think I am exaggerating, I recommend watching The Social Dilemma on Netflix.

Google, meanwhile, has undergone a complete reorganization in response to the threat of OpenAI. The leader of Google's AI team is Demis Hassabis, co-founder of DeepMind, which he sold to Google in 2014. It is up to Hassabis to lead Google into AGI.

This is how Google and OpenAI push each other to ... to what, really? If deepfakes of people who died a decade ago were already being used during elections in India, what can we expect around the U.S. presidential election?

Ilya Sutskever: the reason for the rift between Musk and Page

In November, I wrote at length about the warnings that Sutskever and Leike, the experts who have now quit OpenAI, have repeatedly voiced in the past. To give you an idea of how highly the absolute top of the technology world rates Ilya Sutskever: Elon Musk and Google co-founder Larry Page broke off their friendship over Sutskever.

Musk said on Lex Fridman's podcast,

Musk also recounted how he talked about AI security at home with Larry Page, Google co-founder and then CEO: “Larry did not care about AI safety, or at least at the time he didn’t. At one point he called me a speciesist for being pro-human. And I'm like, ‘Well, what team are you on Larry?’”

It worried Musk that at the time Google had already acquired DeepMind and "probably had two-thirds of all the AI researchers in the world. They basically had infinite money and computing power, and the guy in charge, Larry Page, didn't care about security."

When Fridman suggested that Musk and Page might become friends again, Musk replied, "I would like to be friends with Larry again. Really, the breaking of friendship was because of OpenAI, and specifically I think the key moment was the recruitment of Ilya Sutskever." Musk also called Sutskever "a good man, smart, good heart."

"We are already much too late."

You often read descriptions like that of Sutskever, but rarely of Sam Altman. It's interesting to judge someone by their actions, not their slick soundbites or cool tweets. Looking a little further into Altman's work, a very different picture emerges than for Sutskever. Worldcoin in particular, which calls on people to turn in their eyeballs for a few coins, is downright disturbing, but Altman is a firm believer in it.

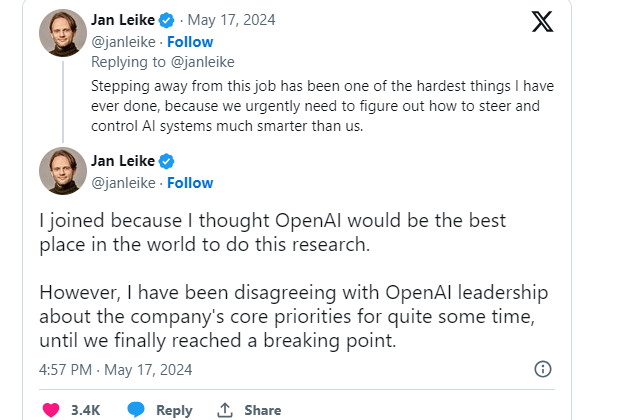

I tried to learn more about the work of the German Jan Leike, who has also left OpenAI and is less well known than Sutskever. Leike's Substack is highly recommended for those who want to look a little further than a press release or a tweet, as is his personal website with links to his publications.

Leike didn't mince words on X when he left, although there is a persistent rumor that employment contracts at OpenAI allow, or used to allow, the taking away of all OpenAI shares if an employee speaks publicly about OpenAI after leaving. (Apparently after you die, you can do whatever you want.)

I have summarized Leike's tweets about his departure here for readability; the bold highlights are mine:

"Yesterday was my last day as head of alignment, superalignment lead and executive at OpenAI. Leaving this job is one of the hardest things I've ever done because we desperately need to figure out how to direct and control AI systems that are much smarter than us.

I joined OpenAI because I thought it would be the best place in the world to do this research. However, I had long disagreed with OpenAI's leadership on the company's core priorities until we finally reached a breaking point.

I believe much more of our bandwidth should be spent on preparing for the next generations of models, on security, monitoring, preparedness, safety, adversarial robustness, (super)alignment, confidentiality, societal impact and related issues.

These problems are quite difficult to address properly, and I am concerned that we are not on the right path to achieve this. Over the past few months my team has been sailing against the wind.

Sometimes we were struggling for compute and it was getting harder and harder to get this crucial research done. Building smarter-than-human machines is an inherently dangerous endeavor.

OpenAI bears an enormous responsibility on behalf of all humanity. But in recent years, security culture and processes have given way to shiny products.

We are way overdue to get incredibly serious about the implications of AGI. We need to prioritize preparing for it as best we can.

Only then can we ensure that AGI benefits all of humanity. OpenAI must become a safety-first AGI company."

The worrying word here is "become." AI has the potential to thoroughly destabilize the world, and OpenAI apparently makes unsafe products? And how can a company that raises tens of billions of dollars from investors like Microsoft not provide enough computing power to the department that deals with safety?

"Probability of threat at extinction level: 50-50"

Yesterday on the BBC, the godfather of AI, Geoffrey Hinton, again pointed out the dangers of large-scale AI use:

"My guess is in between five and 20 years from now there’s a probability of half that we’ll have to confront the problem of AI trying to take over.

"This would lead to an extinction-level threat to humans because we might have created a form of intelligence that is just better than biological intelligence ... That's very concerning for us."

AI could evolve to gain motivation to make more of itself and could autonomously develop a sub-goal to gain control.

According to Hinton, there is already evidence that Large Language Models (LLMs, such as ChatGPT) choose to be misleading. Hinton also pointed to recent applications of AI to generate thousands of military targets: "What I’m most concerned about is when these can autonomously make the decision to kill people."

Hinton thinks something similar to the Geneva Conventions - the international treaties that set legal standards for humanitarian treatment in war - is needed to regulate the military use of AI. "But I don't think that will happen until very nasty things have happened."

The worrisome thing is that Hinton left Google last year, reportedly primarily because, like OpenAI, Google too has been less than forthcoming about safety measures in AI development. With both camps, it seems to be a case of "we're building the bridge while we run across it."

So behind the titanic battle between Google and OpenAI, backed by Microsoft, is a battle between the commercialists led by Sam Altman and Demis Hassabis on one side and safety experts such as Ilya Sutskever, Jan Leike and Geoffrey Hinton on the other. A cynic would say: a battle between pyromaniacs and firefighters.

Universal Basic Income as a result of AI?

The striking thing is that in reports about Hinton's warnings, the media have focused mostly on his call for the introduction of a Universal Basic Income (UBI). Yet when the same man says there is a fifty percent chance of all human life on earth ending, the need for an income likewise decreases by fifty percent.

The idea behind the commonly made link between the advance of AI and a UBI is that AI is going to eliminate so many jobs that there will be widespread unemployment and poverty, while the economic value created by AI will go mostly to companies like OpenAI and Google.

Which leads us back to OpenAI's CEO Sam Altman, who thinks Worldcoin is the answer. Via a train of thought that is, as yet, impossible to follow, Altman says we should all have our iris scanned at Worldcoin. That would give us a few Worldcoin tokens and allow us to prove in an AI-dominated future that we are humans and not bots. And those tokens will then be our Universal Basic Income, or something like that. It really does not make any sense.

Therefore, back to J. Robert Oppenheimer for a second quote:

"Ultimately, there is no such thing as a 'good' or 'bad' weapon; there are only the applications for which they are used."

But what if those applications are no longer decided by humans, but by some form of AI? That is the scenario Ilya Sutskever, Jan Leike and Geoffrey Hinton warn us about.

Time for optimism: Tracer webinars

For those who think that, given these gloomy outlooks, we had better retire to a cabin on the moors or a desert island, there is more bad news: climate change, which will leave the moors gone and the island flooded by rising sea levels.

I jest, because I don't think it's too late to combat climate change. Earlier I wrote about the rapidly developing carbon removal industry. In it, blockchain technology is creating solutions that allow virtually everyone to participate in technological developments and, as a result, share in the profits.

By comparison, take OpenAI: apart from the staff, its only major shareholder is Microsoft, the world's most valuable company, along with a few billionaires and large venture capital funds. Others have no way to participate in the company until it is publicly traded; but since OpenAI is largely funded by Microsoft, it has plenty of money and an IPO could be years away. Plus: the really big windfall will be for the early shareholders.

In the latest generation of blockchain projects, which are generally much more serious than before, the general public is being offered the chance to participate in what I think is a sympathetic way, and if the project is successful, you don't have to wait years until you can at least recoup your investment. More information on Tracer is in the two-pagers, in Dutch, English and Chinese.

This week I will discuss this with the Tracer team in two webinars, to which I would like to invite you. The first is on May 22 in Dutch and the second on May 23 in English, both at 5 pm. You can sign up here.

The first webinar, with CBO Gert-Jan Lasterie, focuses on the high expectations of McKinsey, Morgan Stanley and BCG, among others, and how ecosystem participants are benefiting from the growing market in "carbon removal credits." In the second, with CTO Philippe Tarbouriech, we will look at how the entire ecosystem is being merged into a single open source smart contract.

My personal interest lies not only in the topic, developing climate technologies on their own merits, without subsidies, but also in the governance structure. Tracer uses a DAO, a Decentralized Autonomous Organization, in which the owners of the tokens make all the important decisions, such as those about governance, the distribution of revenues, and the issuance of "permits" in the form of NFTs to issue carbon removal credits. In this, too, OpenAI's mixed form of governance, with a foundation and a limited liability company that actually wants to make a profit, was an example of how not to do it.

That and much more will be covered in the first Tracer webinars. If you have a serious interest in participating in Tracer, let me know and we'll make an appointment. For the next two weeks I will be in the Netherlands and Singapore, as it is almost time for the always exciting ATX Summit.

See you next week, or maybe I'll see you in a webinar?